Gabriela Riccardi talks to host Scott Nover all about how AI algorithms are affecting hiring in the season 5 finale of the Quartz Obsession podcast!


Meet your new hiring manager: She’s an algorithm.

Over the last decade, a growing industry of AI developers has started building algorithmic tools that promise to help companies make better hires than humans can. Hiring AI, vendors proclaim, can make data-based decisions that are more objective than human judgment, less error-prone than human recruiters, and more impartial than biased human reviewers.

But real-world use cases reveal AI is a fickle recruiter. Take this oft-cited anecdote from Amazon, which from 2014 to 2017 trained its own proprietary tool on 10 years’ worth of resumes submitted to the company. Thanks to a male-dominated tech industry, though, most of those applications came from men, a fact the algorithm picked up on.

The result? The computer taught itself that male candidates were ideal—and became biased against women. In fact, any use of the word “women,” like a resume listing “women’s softball” or “women’s rights,” was docked points by the algorithm. The program couldn’t be debugged, and Amazon dropped the project.

But Amazon’s botched job is far from the only documented case of hiring algorithms behaving strangely. AI has been found to make all kinds of arbitrary judgments that keep job-seekers from getting hired—and in many cases, its decisions are discriminatory, too.

That hasn’t deterred software companies from pushing forward with tools they promise can beat bias. (In fact, even Amazon may be trying to build an algorithm all over again, according to a confidential report uncovered in 2022.) Flawed or not, AI is already coming for our job applications: More than 80% of employers quietly use it to make employment decisions, US Equal Employment Opportunity Commission chair Charlotte Burrows suggested in a 2023 hearing.

So pull up a chair at the algorithm’s interview desk—but don’t be surprised if your application is denied. Quartz compiled some of the strangest ways AI has been shown to screen out job candidates, ranging from the wild and weird to the erratic, the erroneous, and the blatantly biased. Inside the algo, there are endless reasons to be rejected.

👩 Your name is Ebony, not Emily

In one well-known 2004 experiment, researchers sent 5,000 resumes to more than 1,000 job postings across the US. The resumes were identical except for one difference: The name at the top was decidedly white-sounding (like Allison or Todd) or Black-sounding (like Aisha or Tyrone). And as the study revealed, the white-sounding candidates were 50% more likely to be offered an interview.

In 2016, researchers from Princeton University and the University of Bath found the same pattern inside AI. GloVe, a widely used word-embedding model that maps words according to how they appear together in text, more readily associated white-sounding names with positive attributes. In effect, the AI had absorbed the same bias that made white-sounding candidates 50% more likely to land an interview.

👨 Or your name just isn’t Jared

Employment attorney Mark J. Girouard told Quartz in 2018 that one of his clients, while vetting an AI-powered resume-screening tool, wanted to see what the algorithm prioritized.

“After an audit of the algorithm,” reporter Dave Gershgorn writes, “the resume screening company found that the algorithm found two factors to be most indicative of job performance: their name was Jared, and whether they played high school lacrosse. Girouard’s client did not use the tool.”

📚 You interviewed with a bookshelf behind you

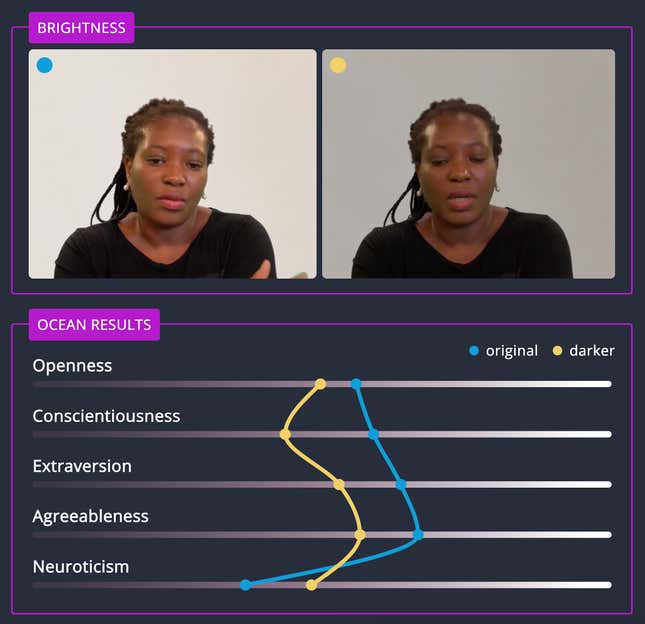

In 2021, a German public broadcaster tested a video interview startup powered by AI. The company claimed to be able to assess candidates across what’s known as the Big Five personality traits, commonly used in psychometric hiring assessments.

Candidates would record video answers to questions posed by the software, and the startup claimed its AI could analyze their voice, language, gestures, and facial expressions to generate the candidate’s personality profile. The AI would then recommend whether they should advance to the next round.

The broadcaster had actors and actresses interview with the software, then interview again with small adjustments to their clothes, camera, or background. When a bookshelf was added behind one actor, the AI’s read on his personality shifted: It ranked him as significantly less neurotic, among other supposed changes to his personality. That may sound like a good thing, but higher neuroticism is considered a plus for some positions.

🥸 Or you sported glasses or a headscarf

The broadcaster found the same sensitivity to accessories and lighting. When an actress put on glasses for a video interview, the AI knocked nearly 10 points off her conscientiousness score. When the same actress took off the glasses and put on a headscarf, she was found to be more open, more conscientious, and less neurotic. When another actress’s video was darkened, four of her five scores fell.

🇬🇧 You have an accent from Leeds or Liverpool or Cardiff

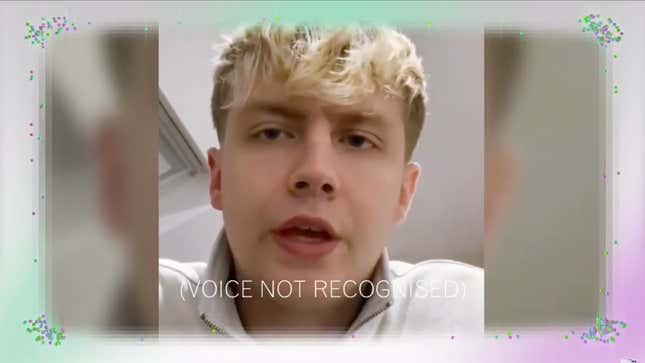

Another frequently used feature of video interview platforms relies on answer transcription. The software poses interview questions to a candidate on camera, then records and transcribes the answers for someone (or something) to review.

To see the impact of voice on transcription, BBC Three documentary Computer Says No (2022) had participants from different areas of the UK read the popular English football anthem “Three Lions.” (You might recognize its oft-shouted line: “It’s coming home!”)

But when some volunteers with regional accents read the lyrics aloud, the AI couldn’t interpret their words. Instead, the transcription service labeled them as “[Voice Not Recognised].”

🇩🇪 But you’d be great if you only spoke German!

In 2021, journalists at the MIT Technology Review tested two AI-powered interview platforms, MyInterview and Curious Thing, by setting up a fake job posting on each tool and then applying for the role themselves. The algorithm would supposedly score, among other qualifications, a candidate’s English proficiency.

Hilke Schellmann completed phone and video interviews with the AI software. On each platform, she interviewed twice: First, she answered questions as if she were undergoing a regular interview. On the second go, she answered questions by reading the Wikipedia entry for psychometrics in German.

The results? Curious Thing ranked her German interview as a 6 out of 9 for English competency, and MyInterview predicted the German-speaking version of Schellmann to be a 73% match for the faux role. The transcription system also rendered her German words as English, even when the result made no sense. Here’s what the algo heard:

“So humidity is desk a beat-up. Sociology, does it iron? Mined material nematode adapt.”

🎓 Your degree comes from a Seven Sisters school

This is the in-house Amazon algorithm again: Trained on resumes submitted mostly by men, it learned a bias against women. The AI explicitly downgraded graduates of two women’s colleges, people familiar with the matter told Reuters. Perhaps even presidential candidates can’t lock down a job with AI.

👂 You have a hearing disability

In 2019, an employment specialist who works primarily with job-seekers who are deaf or hard of hearing had a client apply for a job with Amazon. The application required an interview through HireVue, an AI-powered video platform—and according to the specialist, offered no discernible means to accommodate hearing disabilities.

The specialist, who is deaf herself, tried to call the company to request accommodations but couldn’t reach anyone who could help. Amazon only hired her client after the specialist and the applicant showed up on-site with an ASL interpreter in tow.

💬 Or perhaps a speech disability, or a visual impairment

In 2022, the US Department of Justice and the Equal Employment Opportunity Commission issued joint guidance on how AI-based tools can be used to discriminate against workers with disabilities, though the agencies didn’t point to documented cases.

The DOJ, assistant attorney general Kristen Clarke said, was “sounding an alarm” on AI hiring. “We cannot let these tools become a high-tech pathway to discrimination,” added EEOC chair Charlotte Burrows. In 2023, the agencies released additional guidance about how AI used in hiring can violate civil rights.

Among the dangers the agencies cited: algorithms that screen out candidates because of their disabilities. Video interview software that evaluates applicants’ speech patterns, for instance, “is not likely to score an applicant fairly” if that person has a speech disability, like a stutter, that changes how they speak. A chat-based tool trained to ask about employment gaps may reject candidates who had to take a career break for medical treatment. And a gamified test that requires applicants to clear a threshold, say a 90% score on a memory game, may screen out an applicant who is visually impaired but has all the memory skills the job requires.

🔥 Bonus: An AI firing special

In 2020, a group of UK makeup artists at MAC Cosmetics, owned by Estée Lauder, were told that the company would be undergoing layoffs. They were asked to reapply for their jobs by undergoing a video interview. That interview turned out to be with HireVue, whose algorithm would score their answers.

Rather than performing a makeup trial, as is standard procedure, the artists had to describe their approach out loud: for example, telling the computer how they would apply smokey eye makeup instead of showing it. Repeat: The artists had to demonstrate their makeup prowess using words instead of brushes.

Three of the artists were laid off in a decision based partly on the AI’s analysis. Yet all three said they had scored highly on the assessments they knew about, like their sales numbers and performance reviews. “My track record was gleaming, basically, and I exceeded expectations in everything else,” one of the women told the BBC. “The interview definitely raised some alarm bells to me.” According to the artists, no one at the company could tell them why they had been laid off.

“I literally thought we would be videoed and someone would mark it after. I found out that wasn’t the case,” another woman said. “Nobody saw the video. It was all algorithms.” After suing Estée Lauder for their firings, the artists reached an out-of-court settlement.