From placing a virtual piece of furniture in your living room to playing games or working out in the metaverse, the lines between virtual environments and the real world are increasingly blurred. And powering this digital revolution is an innovative tech stack comprising Augmented Reality (AR), Mixed Reality (MR), Virtual Reality (VR), and Extended Reality (XR).

Despite sharing the “Reality” suffix, every piece of immersive technology is different and useful in its own regard. This guide dives deep into the labyrinth of AR vs. MR vs. VR vs. XR — exploring every concept through a technical lens.


- Why discuss AR vs. MR vs. VR vs. XR in 2023?

- What are the four elements of VR?

- Understanding Augmented Reality (AR)

- Unraveling Mixed Reality (MR)

- Unpacking Extended Reality (XR)

- Is XR a mix between AR and VR?

- Honorary mentions

- AR, MR, VR, and XR compared

- A new dawn of technological realities

- Frequently asked questions

Why discuss AR vs. MR vs. VR vs. XR in 2023?

Apple’s ambitious launch announcement of its Vision Pro — a spatial computing product — has reignited the AR-VR discussion. Despite a novel immersive technology powering it, commentators automatically tag the Vision Pro as an AR-VR headset. Yet the boundary-pushing accessory is much more than augmented and virtual reality.

In fact, with spatial computing in play, this piece of tech is closer to what we term Extended Reality — an umbrella term comprising AR, VR, and MR.

Keeping the not-so-factually-accurate monikers in mind, we are here to quell the AR vs. MR vs. VR vs. XR confusion once and for all. This guide will explain each concept and its fully immersive experiences in the simplest possible way.

Making sense of VR

What is VR?

VR, or Virtual Reality, simulates a virtual environment for users. The idea with this piece of immersive technology is to place a user within an experience rather than bringing the experience to them.

Here is a simple way to understand it.

Imagine you suddenly find yourself standing on Mars instead of in your room. This visual representation of a Mars-like environment is virtual reality. Experiencing VR requires users to wear specialized VR headsets.

How does VR work?

Key components

Virtual Reality takes you to a digital world that’s not real. The creation of that world is an interplay of several underlying yet critical technologies involving headsets, motion tracking, VR software, haptic feedback, and more.

While VR headsets act like high-definition eye-centric screens offering a stereoscopic effect for depth perception, motion tracking is the tech that allows the user to interact with the virtual environment. Even though haptic feedback isn’t a must for experiencing virtual reality, it does improve the user experience by offering a sense of touch or even tactile feedback.

What are the four elements of VR?

Like any other immersive technology, Virtual Reality has many underlying elements. These include tracking systems, user-interface, real-time rendering, and more. However, four of the most important VR elements include:

- The virtual world: This can be a metaverse or any digital space that works outside the real-world environment. The metaverse is the more obvious example, as the likes of Axie Infinity, The Sandbox, and more have incentives and crypto assets linked to them.

- Sensory feedback: This element involves additional sense-based feedback meant to enhance the quality of immersive experiences. For instance, haptic feedback enhances your sense of touch.

- Interactivity: This element determines the degree of interaction between the user and the digital world. The best resources to increase interactivity include handheld controllers, motion-tracking setups, and more.

- Immersion: This element identifies how engrossed the user is with the VR setup. The higher the degree of immersion, the better the VR experience.

VR software development

Each component that we discussed requires a software layer to work in cohesion. A simple way of understanding VR software is to consider it the driving force behind the virtual environment. The VR software processes incoming information in real time, adjusts the in-headset experience for the user, and works closely with the rendering engine.

Did you know? It is the rendering engine that takes the 3D view of the digital world created by VR and projects it onto a 2D image. It even adds shadows, lights, and effects to the 2D view to make it more appealing to the user. The rendering engine does all that at 90fps or higher to reduce the risk of the user experiencing motion sickness.
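To make the projection step concrete, here is a minimal Python sketch of a pinhole-style perspective projection, the left-eye/right-eye offset behind the stereoscopic effect, and the time budget per frame at a given frame rate. It is an illustration under stated assumptions, not how any particular rendering engine is implemented: the function names, the 90-degree field of view, the 1920x1080 image size, and the 63 mm eye separation are all hypothetical values chosen for the example.

```python
import math

def project_point(point, fov_deg=90.0, width=1920, height=1080):
    """Project a camera-space 3D point (x, y, z) onto 2D pixel coordinates.

    Assumes the camera looks down the +z axis; returns None for points
    at or behind the camera plane.
    """
    x, y, z = point
    if z <= 0:
        return None
    # Focal length in pixels, derived from the horizontal field of view.
    focal = (width / 2) / math.tan(math.radians(fov_deg) / 2)
    u = width / 2 + focal * x / z   # pixel column
    v = height / 2 - focal * y / z  # pixel row (image y grows downward)
    return (u, v)

def stereo_disparity(point, eye_separation=0.063, **kwargs):
    """Horizontal pixel offset between the left-eye and right-eye images."""
    x, y, z = point
    half = eye_separation / 2
    # Each eye sees the point shifted sideways by half the eye separation.
    left = project_point((x + half, y, z), **kwargs)
    right = project_point((x - half, y, z), **kwargs)
    if left is None or right is None:
        return None
    return left[0] - right[0]

def frame_budget_ms(fps):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps

# A point straight ahead lands at the center of the image.
print(project_point((0.0, 0.0, 5.0)))
# Nearer points shift more between the two eyes, which the brain reads as depth.
print(stereo_disparity((0.0, 0.0, 0.5)) > stereo_disparity((0.0, 0.0, 5.0)))
# At 90 fps the engine has roughly 11 ms to produce each frame.
print(round(frame_budget_ms(90), 1))
```

The frame budget is the practical constraint here: everything the engine does for one frame, for both eyes, has to fit inside that window.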

Working prototypes/products

Gadgets like the Oculus (now Meta) Rift, PlayStation VR, and HTC Vive are some of the existing, more successful interpretations of virtual reality. While the Rift made low-latency (lag-free) experiences possible, Vive went heavy on motion tracking, and PlayStation made gaming VR mainstream. Notably, Apple’s ambitious and upcoming Vision Pro does have VR elements, including eye-tracking, spatial audio, a 3D UI, and more.

Technological advancements in VR

A lot is happening in the VR space, and quickly. While the tech elements of Virtual Reality, including haptic feedback, motion tracking, and more, are continuously being improved, the number of institutional apps pairing VR with remote collaboration and training is also increasing.

VR hardware has become easier to use and even more affordable, with the likes of Quest 2 from Oculus (now Meta) now available for under $300. And that’s not all. Recent VR-specific developments are also focusing on cross-platform VR apps that will allow companies to have the same software solution under different headsets.

Understanding Augmented Reality (AR)

Imagine seeing your favorite superhero sitting on your bed or standing near the window, all in an experience that keeps the elements of your room intact. This is what AR is like.

What is AR?

This immersive technology is all about overlaying a digital entity atop the real-world environment. With AR, you can make changes to the world in front of you, adding superheroes or other elements to it.

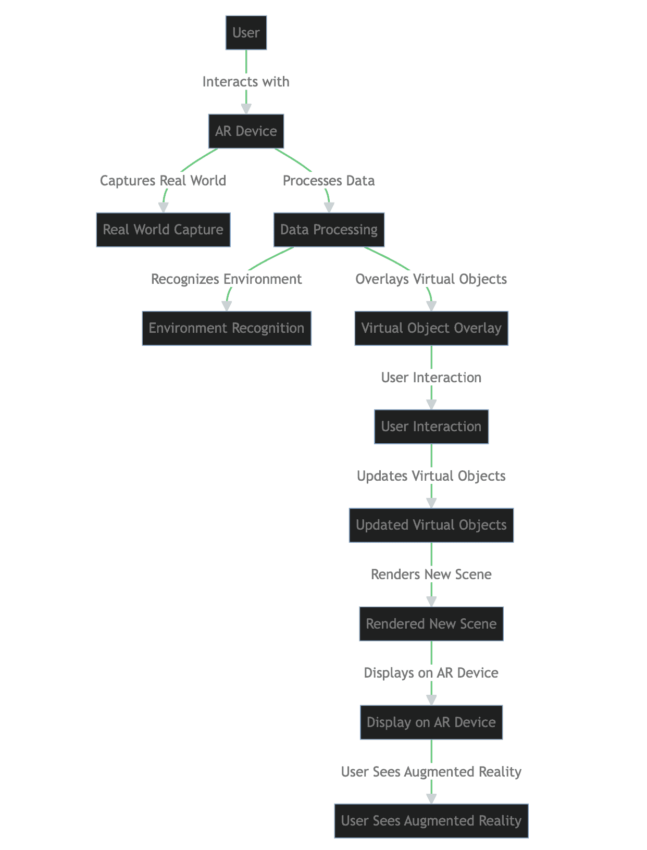

How does AR work?

AR is more accessible than VR. It relies on devices like tablets or smartphones to study and understand the environment. Once that is done, virtual elements are placed as required. AR also works closely with a host of components, including AR glasses and camera sensors. Let us understand them a little better.

Key components

In AR, the cameras and associated sensors capture the data, scanning the environment in the process. You can try this out yourself. Simply head over to an e-commerce store like Amazon to buy a home appliance or furniture. You will have the option to see how the device looks inside your room. First, you will have to scan the room using Amazon’s built-in UI that uses the camera and identifies surfaces.

Gauging spatial depth and orientation using depth sensors, gyroscopes, and other hardware components is how AR functions.
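One way to picture how a virtual object ends up on a scanned surface is a ray-plane intersection: a ray is cast from the camera through the point the user taps, and the object is anchored where that ray meets a detected surface. The sketch below is plain geometry in Python, not the API of any AR SDK. The function names, the 1.5 m camera height, and the flat floor at y = 0 are assumptions made for illustration.

```python
def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(p * q for p, q in zip(a, b))

def hit_test(ray_origin, ray_dir, plane_point, plane_normal):
    """Return the 3D point where a ray meets a plane, or None if it misses."""
    denom = dot(ray_dir, plane_normal)
    if abs(denom) < 1e-9:
        return None  # the ray runs parallel to the surface
    offset = tuple(p - o for p, o in zip(plane_point, ray_origin))
    t = dot(offset, plane_normal) / denom
    if t < 0:
        return None  # the surface lies behind the camera
    return tuple(o + t * d for o, d in zip(ray_origin, ray_dir))

# Hypothetical setup: a phone held 1.5 m above the floor, aimed forward and down.
anchor = hit_test(
    ray_origin=(0.0, 1.5, 0.0),
    ray_dir=(0.0, -1.0, 1.0),
    plane_point=(0.0, 0.0, 0.0),   # any point on the detected floor
    plane_normal=(0.0, 1.0, 0.0),  # the floor faces straight up
)
print(anchor)  # the spot on the floor where the virtual furniture would sit
```

In a real app the surface and the camera pose would come from the sensors described above; here they are hard-coded so the geometry is easy to follow.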

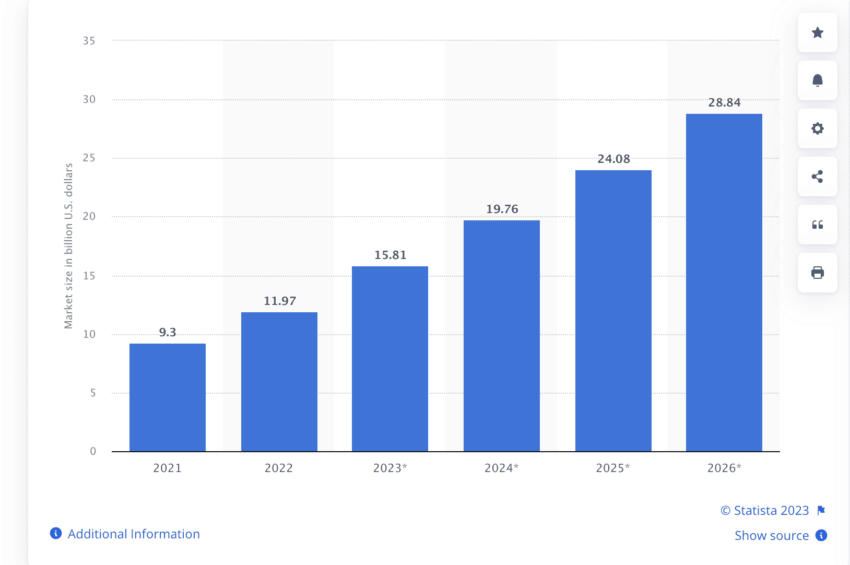

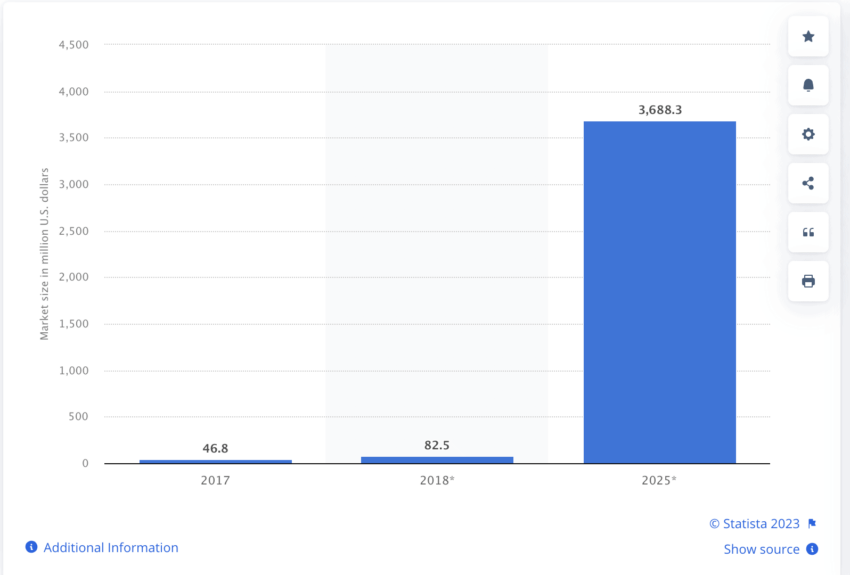

Did you know? According to Statista, the number of AR devices is set to go as high as 1.73 billion by 2024.

What about the software bit?

While sensors help AR receive data, processing happens on the client side, where software modules are placed. AR-specific Software Development Kits (SDKs) include ARCore, ARKit, and more. A lot goes under the hood, but a simple way of understanding is as follows:

The cameras process info and share it with the AR client, where the heavy lifting or processing happens. Once done, the digital overlays are displayed on the concerned medium — smartphone, AR glasses, or directly into the eye.

Working prototypes/products

The HoloLens 2 from Microsoft, Google Glass (edition 2) from Google, Magic Leap One, and more have taken the practical applications of AR to a whole new level. Development in the AR space has also ramped up in 2023, which makes us optimistic about its future.

From normalizing avatar-based interactions to drawing in AR courtesy of apps like SketchAR to developing some intuitive mobile AR resources like location anchors and more, there seems to be no stopping AR. Also, AR has the potential to replace video calls by making holographic communication possible.

But first, AR needs to scale past certain challenges.

Technological advancements in AR

A few complementary technologies are steadily maturing, making AR look much more innovative in 2023. These include Simultaneous Localization and Mapping (SLAM) for improving the understanding of diverse environments, AR cloud in the form of spatial mapping, and more. Regardless of the name, every piece of tech associated with AR aims to make the interactive experience as real as possible.

“If you zoom out to the future and look back, you’ll wonder how you led your life without augmented reality.”

Tim Cook, CEO at Apple: Twitter

Unraveling Mixed Reality (MR)

What is MR?

MR or Mixed Reality is more of an AR-VR amalgamation, allowing you to experience the best of both worlds. Imagine a gaming universe that transitions into your living room at will, with characters moving seamlessly from one reality to another.

How does MR work?

MR works by bringing several underlying pieces of tech under one roof. It all starts with the MR headset, such as a HoloLens, Quest 3 from Meta, or a Magic Leap, that offers something more than just VR and AR. These headsets scan environments, and the built-in software creates virtual digital objects and interactions according to user preferences. To make MR work, technologies like spatial mapping, computer vision, and 3D modeling need to work together.

In simple terms, MR aims to make your life easier, allowing you to control scenarios and spaces better. You might wonder how interaction with digital objects in a real space happens, such as typing a password in the air on a virtual keyboard placed in front of you. Several technologies are in play here, including gesture recognition, eye tracking, haptic feedback, and hand tracking.

Working prototypes/products

Regarding products and prototypes, Microsoft’s HoloLens 2 is one of the leading options on the market today. For a comprehensive spatial computing experience, the Magic Leap 1 is a good choice. Also, Varjo XR-3 happens to be one of the more evolved MR headsets, offering photorealistic fidelity regarding visuals, insane levels of depth awareness, resolution comparable to the human eye, and more.

Technological advancements in MR

Magic Leap’s Magicverse seems like one of the better implementations of mixed reality. However, if you look at the broader picture, here are some of the advancements that might give MR the legs to grow and evolve further.

Advances in spatial mapping

Spatial mapping, as part of spatial computing, is getting the attention it deserves, thanks to the Vision Pro announcements. This immersive technology will help MR apps detect their surroundings more accurately and take care of depth and other related metrics.

Improvements in the spatial mapping space might be beneficial if, for example, you plan on doing some home workouts in a restricted space. Imagine accessing a virtual battle rope with haptic feedback and hand tracking to burn calories.

Development of real-time 3D object rendering

The quality of MR replications can improve with better real-time object rendering support.

Imagine sitting at a cafe with the headset on, and the MR app scans the entire place to add a few quick virtual objects to your table. That could be a small screen for viewing a movie or a small notepad you can scribble on using hand gestures.

Integration with AI and machine learning

MR working closely with AI can be an insanely powerful combination. The rendered objects placed in front of you could learn from your preferences and surroundings. Here is a fictional scenario:

Remember the cafe example we gave earlier? With AI and ML traits built in, you can achieve much more than just rendering. With AI-driven object recognition, you can train the cameras on your headset to copy information from a physical document on your table and transfer the same onto the virtual notepad you have open. Also, with ChatGPT growing in leaps and bounds, integrating the chatbot with the MR headset for quick query resolution could be next on the agenda.

Another instance could be the cafe becoming crowded all of a sudden. In that case, the headset can intelligently adapt to the environment, activating noise cancellation and improving spatial audio. And if the sunlight coming inside the cafe changes, the transparency levels of the open screens also change automatically. These are some of the hypothetical use cases that might soon become realities.
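The adaptive behavior in this scenario can be sketched as a small rule set that maps readings from the surroundings to headset settings. Everything here is hypothetical: the class names, the 70 dB and 10,000 lux thresholds, and the choice to make screens more opaque as the light gets brighter are assumptions for illustration, and a real headset would likely rely on learned models rather than fixed rules.

```python
from dataclasses import dataclass

@dataclass
class Surroundings:
    noise_db: float     # ambient noise level picked up by the microphones
    ambient_lux: float  # ambient light level picked up by the light sensor

@dataclass
class HeadsetSettings:
    noise_cancellation: bool
    screen_opacity: float  # 0.0 = fully transparent, 1.0 = fully opaque

def adapt(surroundings, noisy_above_db=70.0, bright_above_lux=10000.0):
    """Pick headset settings for the current surroundings (assumed rules)."""
    # A crowded cafe is treated as anything louder than the noise threshold.
    noise_cancellation = surroundings.noise_db > noisy_above_db
    # Assumption: brighter light needs more opaque virtual screens to stay readable.
    brightness = min(surroundings.ambient_lux / bright_above_lux, 1.0)
    opacity = round(0.3 + 0.7 * brightness, 2)
    return HeadsetSettings(noise_cancellation, opacity)

quiet_and_dim = adapt(Surroundings(noise_db=50.0, ambient_lux=0.0))
crowded_and_sunny = adapt(Surroundings(noise_db=80.0, ambient_lux=10000.0))
print(quiet_and_dim)
print(crowded_and_sunny)
```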

Unpacking Extended Reality (XR)

What is XR?

XR, or extended reality, is more like an umbrella term that encompasses all of the immersive technologies and experiences we have mentioned. XR was envisioned as a concept because the lines between VR, AR, and MR are expected to blur in the years to come. Simply put, XR is an immersive technology that aims to combine AR, VR, and even MR dynamically and fluidly.

How does XR work?

On the surface, XR works by making it possible to switch between AR, VR, and MR, on-demand or per the perceptive abilities of the device itself. Let us now revisit the cafe scenario from earlier to understand how XR might work.

Imagine you are wearing an XR headset. When the cafe is crowded, and you have no need to interact with anyone inside, the headset moves you to a focused VR mode, say a forest. That blocks out any connection to the real world as you work. You can even have a notepad nestled within the forest.

Now imagine that you need to work with some actual physical documents. You can switch over to the AR mode, drag the content of a notebook in front of you, and start reading or editing it, as required. Finally, if you want a more immersive experience with gesture support, spatial mapping, hand tracking, or more, the MR mode can be activated.
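The cafe walkthrough amounts to a simple decision: given what the user needs right now, pick one of three modes. Here is a minimal Python sketch of that idea. The `Mode` enum, the `choose_mode` function, its parameters, and the priority order of the checks are all hypothetical and do not describe how any shipping XR headset decides.

```python
from enum import Enum

class Mode(Enum):
    VR = "vr"  # fully virtual surroundings, real world blocked out
    AR = "ar"  # digital overlays on top of the real world
    MR = "mr"  # virtual objects you can interact with in real space

def choose_mode(wants_isolation, needs_physical_documents, wants_gesture_interaction):
    """Pick a mode for the cafe scenario (assumed priority order)."""
    if wants_gesture_interaction:
        return Mode.MR  # gesture support, spatial mapping, hand tracking
    if needs_physical_documents:
        return Mode.AR  # keep the real desk visible while reading or editing
    if wants_isolation:
        return Mode.VR  # crowded cafe, nobody to talk to: move to the forest
    return Mode.AR      # assumed default: stay connected to the real world

print(choose_mode(wants_isolation=True, needs_physical_documents=False,
                  wants_gesture_interaction=False))
```

A device that switches on its own, rather than on demand, would feed sensor readings into the same kind of decision instead of explicit user choices.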

All of that comprises the world of extended reality. But there is more.

Technological advancements in XR

Extended reality would be best served with IoT-based integrations, a feature that would allow your headset to connect with your fitness tracker and receive regular updates on heart rate and other metrics. If your heart rate rises while you are stressed, the headset might suggest or shift to a more pleasant VR mode.

Another impactful sub-tech that can improve XR apps and resources is the use of audio raytracing. When used with XR, this technology can enhance the sense of immersion and presence. For instance, if you are playing a VR game that requires you to go inside a cave, audio raytracing can help replicate the exact sound of a real cave, adding to the immersive experience.
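To see why tracing sound paths changes what you hear in a cave, consider a single echo. The sketch below uses the image-source shortcut, a simpler relative of full audio ray tracing that handles one flat wall: mirroring the sound source across the wall gives the length of the reflected path, and dividing by the speed of sound gives the arrival time. The function name, the wall placed at x = 0, and the positions are assumptions for illustration, and the source and listener are assumed to be on the same side of the wall.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at about 20 degrees Celsius

def arrival_times(source, listener, wall_x):
    """Arrival times in seconds of the direct sound and one wall reflection.

    The wall is the plane x = wall_x. Mirroring the source across it gives a
    point whose straight-line distance to the listener equals the length of
    the reflected path.
    """
    mirrored = (2 * wall_x - source[0], source[1], source[2])
    direct = math.dist(source, listener) / SPEED_OF_SOUND
    reflected = math.dist(mirrored, listener) / SPEED_OF_SOUND
    return direct, reflected

# Hypothetical cave: sound source 1 m from the wall, listener 3 m from it.
direct, reflected = arrival_times(
    source=(1.0, 0.0, 0.0), listener=(3.0, 0.0, 0.0), wall_x=0.0
)
print(f"echo lags the direct sound by {(reflected - direct) * 1000:.1f} ms")
```

A full audio ray tracer would follow many such paths across many surfaces and also model how much energy each bounce absorbs, which is what gives a space its recognizable sound.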

Audio raytracing can work wonders alongside spatial audio, the go-to audio tech powering Apple’s Vision Pro. As spatial audio deals with 3D acoustic interactions, it can work with audio raytracing to amplify the overall experience.

“One aspect of #VisionPro that I think people haven’t fully been able to appreciate is the advanced Spatial Audio system. It’s insane, and the whole device is really the most advanced consumer electronics device ever created.”

Sterling Crispin, former researcher at Apple: Twitter

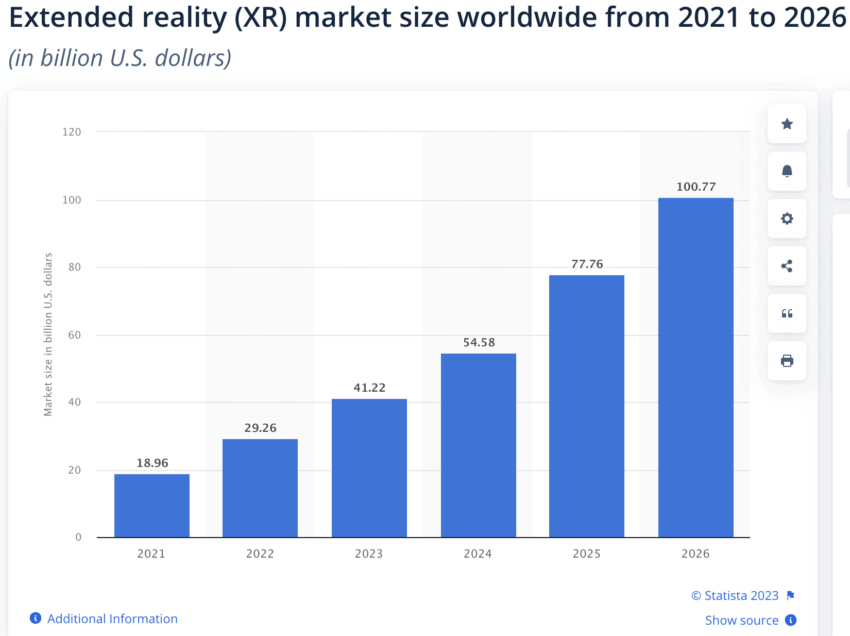

With AR and VR becoming mainstream, gaming metaverses growing in stature, and NVIDIA working on perfecting its Omniverse as a future of digital space sharing, Extended Reality adoption might be nearer than we think.

Is XR a mix between AR and VR?

Due to similar use cases and the ability to work with virtual elements, XR or Extended Reality is often thought of as an amalgamation of AR and VR. It is more.

In “XR,” the X works as a variable that can stand for any number of technologies. Yes, AR and VR have a role to play, but the world of extended reality also includes spatial audio, spatial computing, audio ray tracing, IoT, and much more. XR brings in a family of realities, giving the user complete control over which one they want to be in.

Honorary mentions

Any discussion focussing on VR vs. AR vs. MR vs. XR would be incomplete without the mention of some other immersive technologies. Here are a few areas of note:

Spatial computing

This computing technology allows users to interact with any three-dimensional physical space, combining AR, VR, and MR. Here is a possible use case for understanding spatial computing better.

Imagine you are an architect wearing an MR or XR headset. With spatial computing support built in, you can pull up a three-dimensional architectural model and superimpose it onto the actual working site. This way, you can better visualize and inspect every aspect of the layout.

In addition to spatial computing, technologies like Holography and Computer Vision can readily enhance user experiences associated with AR, VR, MR, and XR implementations. With Holography support built-in, headsets should be able to project pathways and anatomies, whereas Computer Vision support can help with object identification, helping with warehousing, inventory management, and more.

That sums up our VR vs. AR vs. MR vs. XR comparison. As you can see, every immersive technology we discussed aims to enhance user productivity.

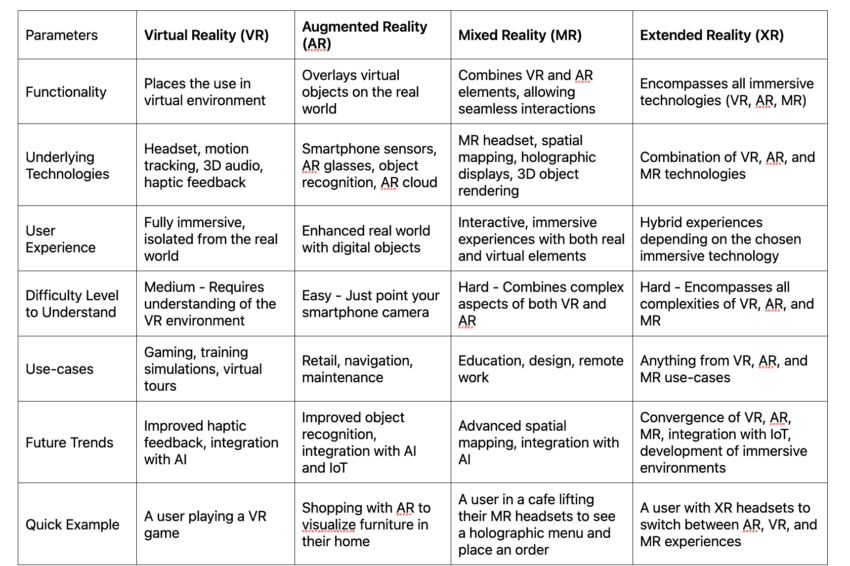

AR, MR, VR, and XR compared

If you are pressed for time, here is a quick comparison table to help you identify the VR vs. AR vs. MR vs. XR distinctions more succinctly:

A new dawn of technological realities

Reality is warped. You can look at and interact with it in any way you see fit. In comparing VR, AR, MR, and XR, this guide shows that each kind of immersive technology aims to make a user’s life easier and more enhanced in a specific way. And the beauty of all that is that we can readily mold and combine these technologies to have an era-changing effect on how humans lead their lives. So the reality of the new “Reality” is all about malleability and flexibility. But we’re just in the infancy of this innovative technology. If we can say anything for certain, it is that there is more to come.

Frequently asked questions

What is the difference between AR and mixed reality?

What can you do with a mixed-reality headset?

Does mixed reality require a headset?

What are the three types of virtual reality?
