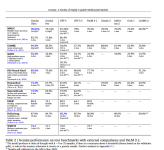

Google claims Gemini beats GPT-4 in "30 of the 32 widely used academic benchmarks."

See full article...

Interesting choice to have the threads come out on the right the same as they go in on the left. Great signifier for activity without progress, though.

Looking at the logo image, it seems that Google has really taken a page out of Apple's branding playbook. I could easily see this as a slide at an Apple presentation. Except for the color gradient in the letters, perhaps.

I think the Apple vibe is coming from being on a dark background. Normally Google favors a white background in presentations and logos. If I picture it in the inverse, it doesn't seem "clean" enough for an Apple logo, and looks much more Google-esque, in my mind at least.

Right. But why is it palindromic? Looks like stuff comes in one end, gets mushed about, then the exact same thing exits via stage right?

Interesting, but not surprising: it will only work on Google platforms.

At this point, I just wish that they would log every piece of generated text and sell it to universities/Turnitin so we could help cut down the absolute DELUGE of generated mediocrity. So many faculty have no idea how to handle this, and the students are not only generating bad work but also hamstringing themselves pretty hardcore.

It's an interesting problem. No solution, btw. This is what obsolescence of a model of "knowledge" looks like. An epistemic paradigm shift. Buckle up!

This is happening all across grade school as well. The system is not set up for teachers in, say, 9th grade to screen for it, so it's ... just happening. Homework assignments are all meeting spec. No one knows exactly what to do. This is from my friend in the NYC school district. Deer in headlights.

In college it is of course the same, but doubled by the cleverness of the students and by how tricks spread through student bodies. Large-scale denial.

So, it is bad right now. And there's no solution anyone in education gets paid enough to toil over.

Next year will be this year +1, the year after +2, and so on. Students entering first-year college classes in 2025 will have a +2 AI off-load factor; in 2026, +3, and so on.

We have no broadly shared idea of what this is going to do to cognitive development, or of what pedagogy now needs to look like.

Buckle up indeed. Everyone reading this article is old tech. In 5 years, what will a college student look like? A high schooler?

Whelp, I guess their panic-rush solution, Bard, is dead or dying then. After that botch job, why exactly should we believe them on this one, or why would anyone want to use it when it might get killed just as fast?

So Google admits that Gemini is still worse than ChatGPT right now, but sometime next year, the "Ultra" version might be fractionally better than what ChatGPT is now. Cool. Great.

I mean, it's absolutely not a solution, but you would be surprised how much of the absolute trash we're currently swamped in it would gut.

Where is Amazon in all this? Are they not participating in the race? Are they in stealth? Am I just missing the coverage? Are they just so far behind that no one cares yet? A quick web search suggests the last is true.

For now, Google hopes that Gemini will be the opening salvo in a new chapter of the battle to control AI assistants in the future, opposing firms like Anthropic, Meta, and the in-tandem duo of Microsoft and OpenAI.

Gemini is also excellent at inflating Google's PR language—if the models were any less capable and revolutionary, would the marketing copy be any less breathless? It's doubtful.

Man... combined with the fact that late Gen Z and Gen Alpha were raised with iPads, which have been linked to cognitive issues in younger kids*, it's worrying, to say the least. Over-reliance on technology is going to be a very big issue in the next decade (or less) as they graduate and enter adulthood.

*I am aware that the study concludes that it is the quality of the content rather than the quantity that matters, but the issue is that giving a kid a tablet is almost always going to result in them watching overstimulating garbage on YouTube with ads pushed by algorithms.

We argue that the effects of screen viewing depend mostly on contextual aspects of the viewing rather than on the quantity of viewing. That context includes the behavior of adult caregivers during viewing, the watched content in relation to the child’s age, the interactivity of the screen and whether the screen is in the background or not. Depending on the context, screen viewing can have positive, neutral or negative effects on infants’ cognition.

A company touting benchmarks is a red flag, too. Makes you wonder how much they were trying to game the benchmarks. It's an LLM, for crying out loud. If there's any time to let your product do the talking, it's now.

When you see a super-polished corporate video where a bunch of guys pat themselves on the back, without a single demo snap of the actual product in it, you can smell a rat miles away.

Anyway, good attempt, Google. I feel bad for Demis Hassabis, who's been teasing Gemini hard recently but is still playing catch-me-if-you-can with ChatGPT.

Whew, good thing I raised my young'uns with Kindles!

Yep. As someone who does a very limited amount of university teaching: the AI-generated assignments I've seen currently range from utterly garbage hallucinated bullshit to mediocre work scraping through, but five years from now really worries me.

And “Not reading the sponsored content inevitably injected into the responses is a violation of the Terms of Use…”

With ADS!